- About Me

- Courses/Projects

- Programming

- Research

- Resume

- Useful Links and Comments

- Financial Models

- Design Patterns

- Reading List

- News

- 心情驿站

I spent quite a few years studying engineering and was trained to use practical methods for solving the real world problems. However, I became insatiated with just the know-how, I was always self motivated to know what happened behind the scenes. I started auditing a few math courses offered in the math faculty and have been deeply enthralled by the beauty of mathematical optimization and mathematical finance. To my greatest honor, my supervisor, Prof. Yuying Li gave me an opportunity to do studies and research in the area of Computational Finance in her seasoned supervision, in pursuit of my second master's degree at the University of Waterloo. I am so grateful for having being given this great opportunity to take/audit various courses taught by so many wonderful professors: to name a few, Prof. Peter Forsyth, Prof. Carole Bernard, Prof. Christopher Small, Prof. Levent Tuncel, Prof. Henry Wolkowicz, Prof. Stephen A. Vavasis, Prof. Nick Wormald etc.

During my studies at the University of Waterloo, I got to know many gifted colleagues and leared so much from them. I always hold the tenet that learning process is a lifelong process so I need to work hard to overcome my weakness and keep up with the fast pace of those talents. Some of my best friends, Wei Zhou(PhD at UW), Jun Chen (PhD at UW), Tiantian Bian (Microsoft), Xiangxian Ying (PhD, Professor), Shilei Niu (PhD at UW), Jenny Jin (Capable California Girl), Vris Cheung (PhD at UW), Leo Liu (Manulife Financial) and many more, have been generously giving me every support and encouragement whenever in need.

After graduation, I started my career as a software developer. It is very exciting to know many useful tools in industry to make what we believe happen in the real word. I got the exposure to CSV to XML converter to Castor Object to Domain Object to the storage and retrieval of Java domain objects via Hibernate's Object/Relational Mapping, Apache Struts for developing Java EE web application, JSTL/Javascript/jQuery for JSP page design, JUnit test in the test-driven development. This period of work experience greatly enhanced my knowledge and skills in dealing with a large credit application and batch program processing system.

Currently I am working at BMO Capital as a technical specialist. I am mostly involved in ETL/Model Conversion for the Stress Testing Framework in accordance with Basel II/III capital accord requirements. My most recent resume in PDF version can be found at this link for 3-page or 2 page version.

- Finance 1: Course Notes and References

- Finance 2: Course Notes and Project

- Finance 3: Course Notes and References

- Stat 901 Probability Theory 1: Course Notes

- Stat 902 Probability Theory 2: Course Notes

- Stat 833 Stochastic Processes: Course Notes

- PMath 354 Measure Theory and Fourier Analysis: Course Notes

- CS 860 Geometric Data Structures: Course Notes and Project

- ECE610 Broadband Networks: Project

- ECE710 Space Time Coding for Wireless Communication:Course Notes and Project

- CO 671 Semidefinite Optimization: Course Notes and Reports

- CO 663 Convex Optimization and Analysis: Course Notes

- CO 739 Random Graph: Course Notes

- CO 759 Topics in Discrete Optimization: Algorithmic Game Theory Course Notes

- CO 769 Topics in Continuous Optimization: Compressive Sensing Course Notes

- CO 778 Portfolio Optimization: Course Notes

Finance One

This course provides the current paradigms in the theory of finance and the supporting empirical evidence. Prof. David Saunders introduced the basic concepts of the field, with an emphasis on the economic principles that underlie the main models. Discrete time models were mainly explained. Pricing and hedging of financial assets, portfolio selection, risk management and equilibrium models for asset valuation were examined. The electronic version of the course notes is available upon request.

Finance Two

Finance 2, taught by Prof. Carole Bernard is a subsequent course to finance 1. It mainly covers the continuous-time finance models, e.g, Black-Scholes-Merton framework, Fixed Income models including Vasciek, CIR, Hull-White etc. Ito's lemma, change of measure (Radon Nikodym Theorem, Girsanov Theorem), change of numeraire techniques have been thoroughly investigated and applied to pricing the various kinds of financial derivatives, e.g., call on call, exchange option etc. The link to the course notes is here.

As for the project, I worked on the derivation of the pricing formulas for global cap and monthly sum cap under Black-Scholes' Framework. Thanks to Ms. Jenny Jin for providing the Matlab code for the verification of the results using FFT method. The project report can be found here.

Finance Three

Finance 3 is more on the research side whileas it mainly covers 3 big chunks. As for topic 1, portfolio optimization is investigated in depth. Efficient portfolios: the efficient frontier, the capital market line, Sharpe ratios and threshold returns. Practical portfolio optimization: short sales restrictions target portfolios, transactions costs. Quadratic programming theory. Special purpose quadratic programming algorithms for portfolio optimization. Risk management in a quantitative framework including stress testing are represented in the second part. In particular, Bayesian net is a very power tool, which indeed has already been applied in many areas to estimate/calculate the risk quantile (LGD) in a systemically correlated setting. The third part is dedicated to some more advanced finance models, Levy's process etc. (in year 2009). The electronic version of the course notes including midterm answers are available upon request.

Probability Theory 1

Probability Theory 1 is a must-take course on your course list if you really want to become a quant who wants know-why in addition to know-how. Sigma-algebra and set theory are introduced at a glimpse. Monotone Convergence Theorem, Central Limit Theorem and Conditional Probability/Expectation are rigorously explained here. The link to the course notes is here.

Probability Theory 2

Probability Theory 2 is an advanced math course to establish the main principles of stochastic calculus within the simplest setting of stochastic integration with respect to continuous semimartingales. The course mainly covers discrete-parameter martingales, continuous-parameter martingales, stochastic integration of progressively measurable integrands and representation of local martingales as stochastic integrals. This course content is deep and prof. Andrew J. Heunis knows this subject really well and he always tried to explain the meaning behind the abstract formulas. The link to the course notes is here. The official course notes is also available in PDF.

STAT 833

Random walks, renewal theory and processes and their application, Markov chains, branching processes, statistical inference for Markov chains are covered in this course. The link to the course notes is here. Prof. Christopher Small is an excellent instructor in teaching. I audited this course and Probability Theory 1 taught by him. He knows exactly how to communicate complex ideas within the scope of students' knowledge base.

Pure Math 354

It is always good to take pure math courses whenever you want to get a deep and comprehensive understanding of the topic. Lebesgue measure on the line, the Lebesgue integral, monotone and dominated convergence theorems, Lp-spaces: completeness and dense subspaces. Separable Hilbert space, orthonormal bases. Fourier analysis on the circle, Dirichlet kernel, Riemann-Lebesgue lemma, Fejer's theorem and convergence of Fourier series. This is a very good course for non-math major students to ramp up measure theory. The course notes is available here.

Computational Geometry

This course covers selected topics on data structures in low-dimensional computational geometry including “Orthogonal range searching”, “Point location”, “Nonorthogonal range searching”, “Dynamic data structures”, “Approximate nearest neighbor search”. The link to the course notes is here.

In particular, my course project presentation on “Maximum Independent Set of Rectangles” is also provided here.

Abstract: The maximum independent set of rectangles problem has lots of application from map labelling to data mining in practice. A collection of objects is called independent if no pair of objects in the collection intersect. The unweighted MISR problem is designed to find an independent set with maximum cardinality among all independent sets. The MISR problem is just a special case of Maximum Independent Set (MIS) problem in graph theory. However, even for MISR, it has been proved to be an NP-hard problem. Hence researchers are focused on finding good approximation algorithms for this problem. The current best known algorithm for general MIS problem yields O(n/(log n)^2)-approximation factor. For MISR, O(log n)-factor algorithm has been well established by several researchers independently. The contribution of Parinya and Julia’s paper lies in that they proposed an O(log log n)-approximation algorithm for MISR, which for the first time beats the O (log n)-factor barrier. In Chan and Peled’s paper, they used local search method and novel rounding scheme to solve maximum independent set of pseudo-disks, which give constant approximation algorithms for both unweighted and weighted cases. In the appendix, they gave an O(logn/loglogn)-approximation algorithm for MISR problem.

Broadband Networks

This course is concerned with the fundamentals of broadband communication networks including network architecture, Switch fabrics, design methodology; traffic management, connection admission control (CAC), usage parameter control (UPC), flow and congestion control; capacity and buffer allocation, service scheduling, performance measures, performance modeling and queueing analysis. The instructor, Prof. Ravi. R. Mazumdar is very math oriented. Intensive mathematical derivations were introduced in this course. One course project was to ask students to implement different models of queues and compare the performance. The project itself was not very difficult, but one needs to be a bit more extra thinking. I also provided some mathematical results on some models and a more robust pseudo random number generating algorithm after a literature survey so I was the only one who got full marks on this project. The manuscript for this project can be found here.

Space-Time Coding

I audited this course, which was taught by Prof. Murat Uysal , just because I was very interested in coding theory. This course mainly covered “Error Probability Analysis”, “Space-Time Trellis Coding (STTC)”, “Space-Time Block Coding (STBC)”, “Multiple-input Multiple-output (MIMO) Information Theory”. I did both two written assignments/projects on implementing the space-time coding algorithms and evaluated the performance. I got 98/100 for these two assignments. The write-ups can be found at the following links Simulation of Random Fading Channels and STTC Using Convolutional Codes.

Semidefinite Optimization

I have complied the courses notes in Section 1: Basics and Section 2: Combinatorial Applications in PDF format. Prof. Levent Tuncel gets this monograph published very recently at Amazon Web Link. This is a very good book which I highly recommend to those who want to get a deep understanding of semidefinite programming and its applications to many practical problems including Max Cut, Geometric Representation of Graphs and Lift-and-Project procedures for Combinatorial Optimization problems. I referred in my thesis to Chapter 4 in this monograph for details on how Primal-Dual Interior-Point Method works.

Convex Optimization and Analysis

An introduction to the modern theory of convex programming, its extensions and applications. Structure of convex sets, separation and support, set-valued analysis, subgradient calculus for convex functions, Fenchel conjugacy and duality, Lagrange multipliers, minimax theory. Algorithms for condifferentiable optimization. Lipschitz functions, tangent cones and generalized derivatives, introductory non-smooth analysis and optimization. The course notes can be found here.

Random Graphs

This course is deep. In the last few lectures, a very difficult theorem “Large random d-regular graphs are almost always Hamiltonian (for d at least 3)” was proved step by step. I always remembered the moment spent with Dr. Zhou Wei going through every detail of the proof for 12 hours. The course notes can be found here.

Topics in Discrete Optimization: Algorithmic Game Theory

This course was taught by Prof. Chaitanya Swamy. Algorithmic game theory applies algorithmic reasoning to game-theoretic settings. A prototypical motivating example for the problems we will consider is the Internet, which is a fascinating computational artifact in that it was not designed by any one central authority, or optimized for one specific purpose, but rather emerged from the interaction of several entities, such as network operators, ISPs, users, in varying degrees of coordination and competition. This course will investigate a variety of questions that arise from looking at problems (often classical optimization problems) from this point of view. We will examine, in part, algorithmic issues in games, and in part, algorithmic problems that arise in settings with strategic players. The course notes can be found here and the course web site is here.

Topics in Continuous Optimization: Compressive Sensing

This is a very good course taught by Prof. Stenphen A. Vavasis. Compressive sensing was introduced independently by Candes, Romberg and Tao (2006) and Donoho (2006) and has attracted a considerable amount of attention from the signal processing, statistical, computer science and optimization communities. An earlier paper by Gilbert, Guha, Indyk, Muthukrishnan and Strauss (2002) also anticipated the results. The main result in this field states that a signal (i.e., a vector), if it is sufficiently sparse, can be recovered exactly from its inner product with a small number of random vectors. The recovery algorithm is L1 minimization, which is equivalent to linear programming. In general, recovery of the sparsest solution to linear equations is NP-hard, but in this special (and practically applicable) case, recovery can be solved in polynomial time. Specialized algorithms for l1 minimization have been around for many years in the optimization community. In the statistics community, L1 minimization is known as a data-fitting technique that is sometimes more robust than linear least-squares and is closely related to a technique called Lasso regression. These two original papers have led to dozens of follow-up works. The course notes can be found here and the course web site is here.

Portfolio Optimization

Basic optimization: quadratic minimization subject to linear equality constraints. Efficient portfolios: the efficient frontier, the capital market line, Sharpe ratios and threshold returns. Practical portfolio optimization: short sales restrictions target portfolios, transactions costs. Quadratic programming theory. Special purpose quadratic programming algorithms for portfolio optimization: today's large investment firms expect to solve problems with at least 1000 assets, transactions costs and various side constraints in just a few minutes of computation time. This requires very specialized QP algorithms. An overview of such algorithms will be presented with computational results from commercial problems. The efficient frontier, the capital market line, Sharpe ratios and threshold returns in practice. The simplified course notes can be found here.

- One Day Coding Project: Properties Server in JAVA

- Addepar Ant Project: it is worth invesitigating if time allowed

- Iterative Tree Traversal in C/C++

- Recursively Reverse Singly Linkedlist in C/C++

- Stack Sort in C/C++

- Print All Combinations of Paired Parentheses in C/C++

- Binary Tress Serialization in C

- Test a given string is the interleaved combination of other two given strings (JAVA) March 4, 2012

- Setup sibling links for a tree (C/C++) March 4, 2012

- Print out all mutation paths using #(Edit Distance) steps (C/C++) March 7, 2012

- Compute # of places a knight can visit (Java) March 9, 2012

- Spirally print out actresses names in C/C++ March 16, 2012

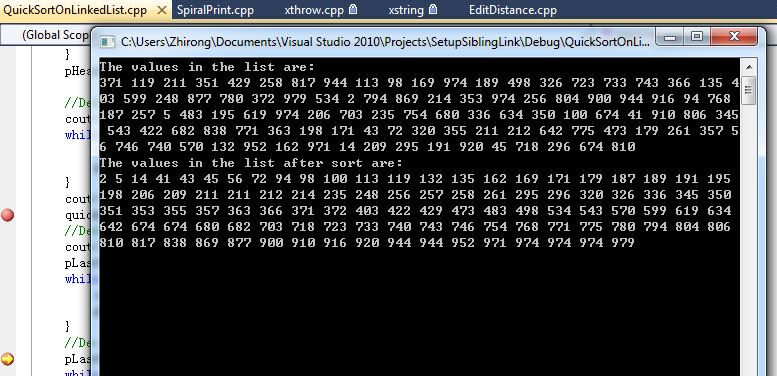

- Quicksort Singly Linked List in C/C++ March 20, 2012

- Naive Approach for deciding identical BSTs in C/C++ April 4, 2012

- Recursion Approach for deciding identical BSTs in Java April 5, 2012

- A simple in-place circular shift of char string in C/C++ April 5, 2012

- Unidirectional Jump game: A naive approach using Dijkstra in C/C++ April 7, 2012

- Unidirectional Jump game: A Greedy approach in C/C++ April 7, 2012

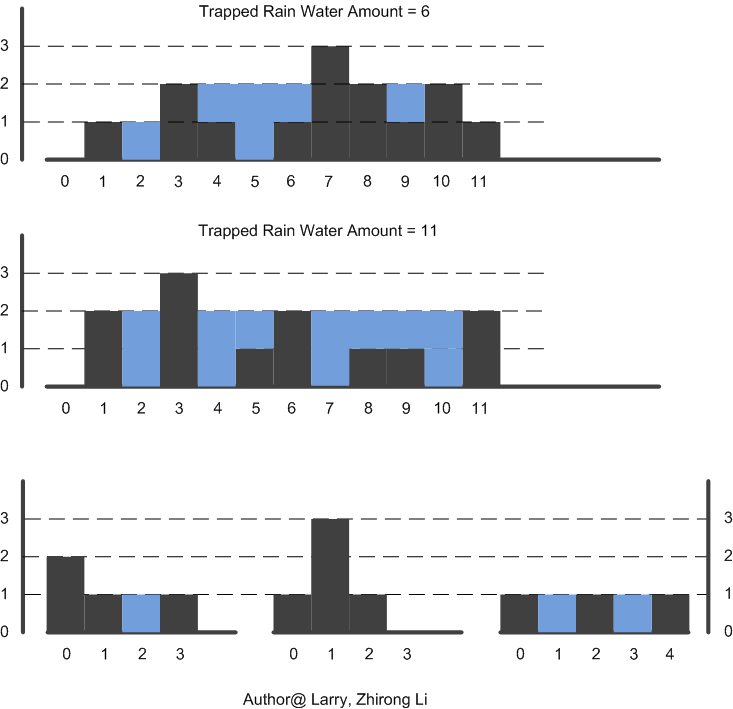

- Trapping Rain Water: A bi-directional scanning approach in C/C++ April 14, 2012

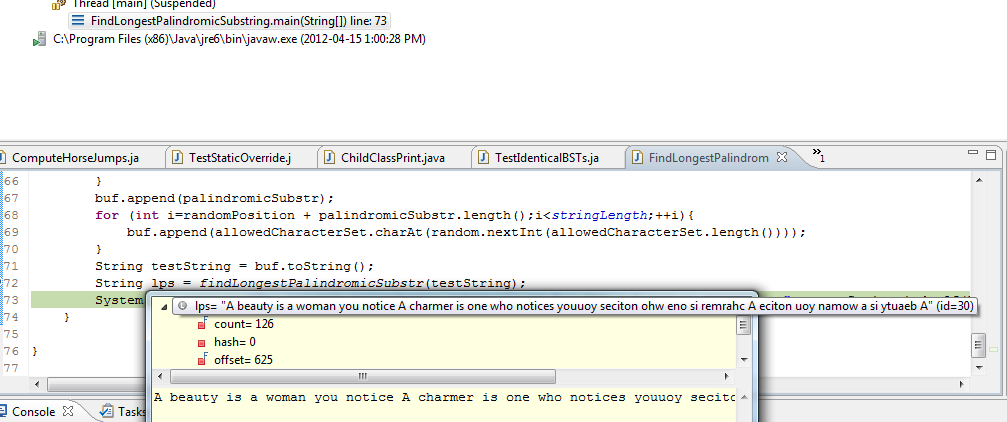

- Longest Palindromic Substring: A suffix sort based approach in Java April 15, 2012

- Find the celebrity in O(N) in C++ April 18, 2012

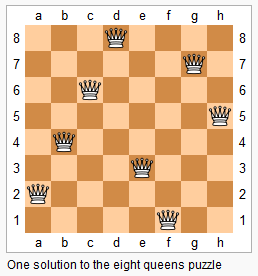

- N-Queens Problem in Java April 18, 2012

- O(N)-time finding the longest Palindromic substring: Manacher's Algorithm in C++ April 21, 2012

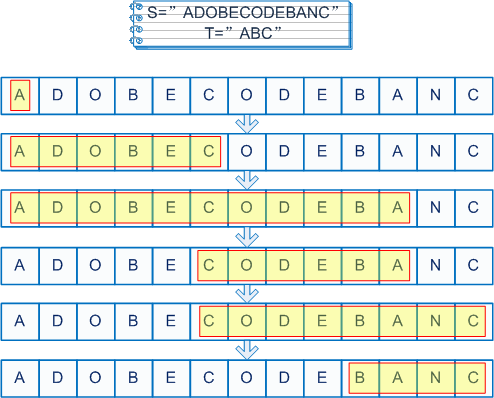

- Finding the minimum window that contains the given character set in O(N)-time Java April 21, 2012

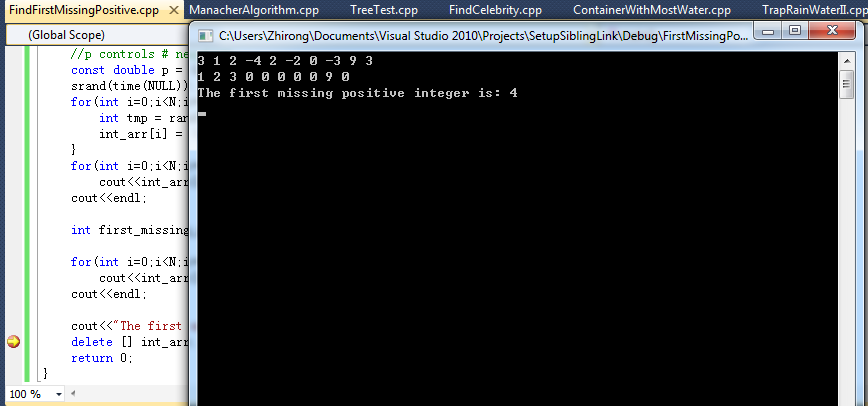

- Find the first missing positive integer in O(N)-time with O(1)-space (C/C++) April 22, 2012

- Sort 3-colored balls in O(N)-time with O(1)-space (Java) April 23, 2012

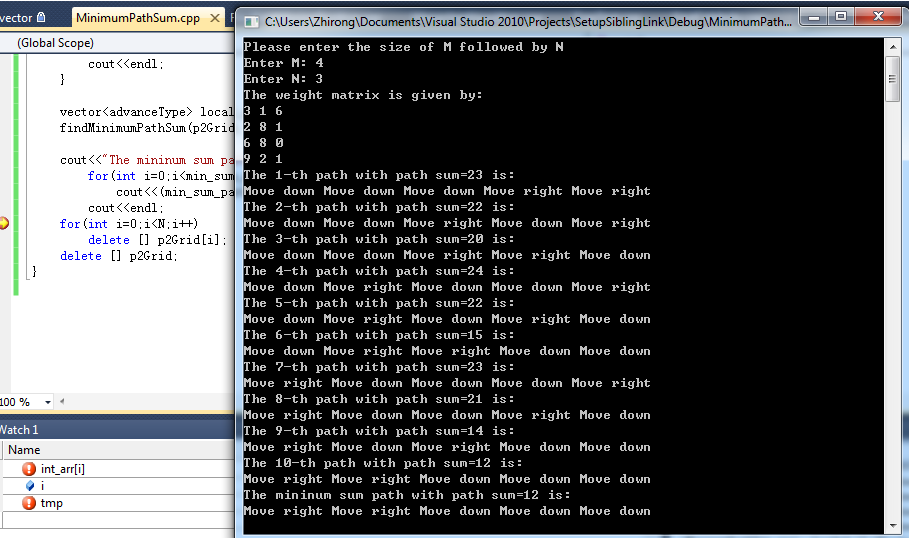

- Minimum Path Sum: recursion approach with backtracking (C/C++) April 23, 2012

- Minimum Path Sum: O(N*M)-DP approach (C/C++) April 24, 2012

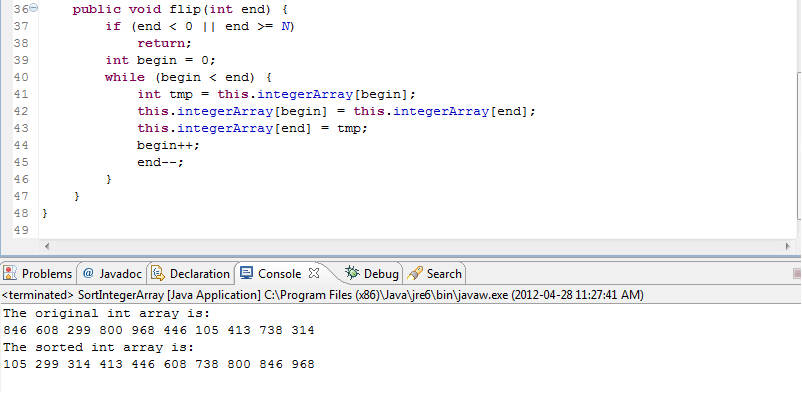

- Flip sort on an integer array in Java April 28, 2012

- Brain teaser: Move guests in/out ball room in C/C++ April 28, 2012

- Tough Question (Open): Find the positions of milestones April 28, 2012

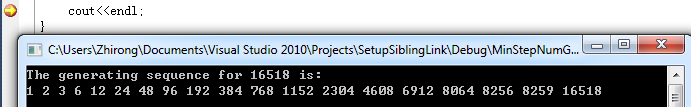

- Brain teaser: Find the positive integer generating sequence using factoring in C/C++ May 2nd, 2012

- Judge if a given string can be scrambled from another given string in C/C++ May 6th, 2012

- DP approach: Judge if a given string can be scrambled from another given string in C/C++ May 7th, 2012

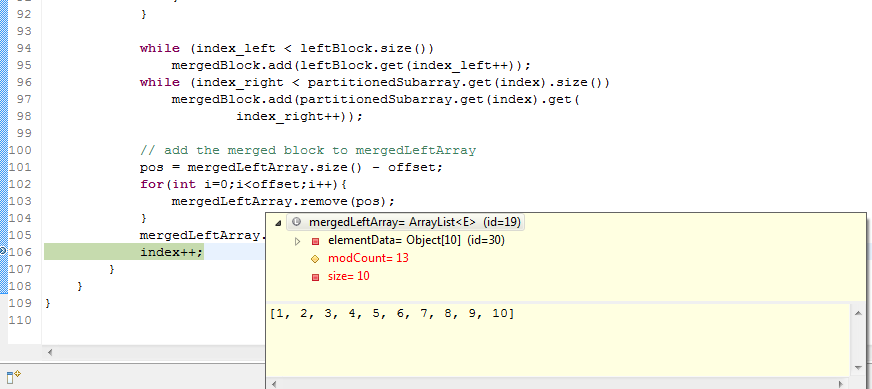

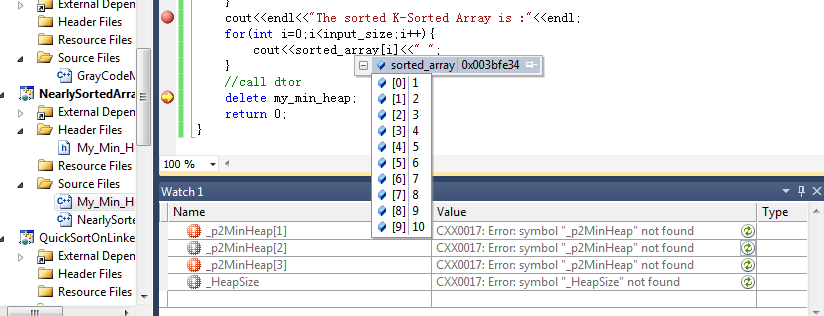

- Approach 1: Sort nearly K-sorted array in O(NlogK)-time in Java May 12th, 2012

- Approach 2: Sort nearly K-sorted array in O(NlogK)-time in C/C++ May 13th, 2012

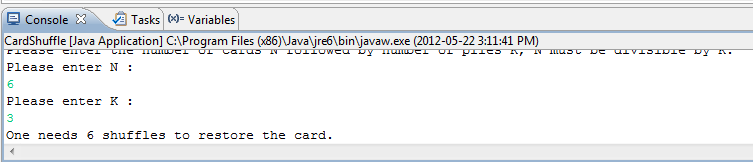

- Find the time period of the shuffling process in Java May 22nd, 2012

- Find path on a binary tree Java Oct 07, 2012

- Format data in CSV in Java Oct 18, 2012

- Grundy Number and Stone Piles Game in C++(referenced), April 15, 2013

- Extract FX Rate in SAS Macro Program, May 14, 2013

- Tricky Group By Statement in SAS SQL, May 14, 2013

- Validating a given column in all SAS libraries, May 27, 2013

- Specific Column Length Check in all SAS libraries, May 27, 2013

Property Service

Write a socket service that maintains a central collection of shared properties for a large distributed system. It should accept telnet-style connections (and will be tested using a telnet client). The service should be able to support a large number of clients concurrently. The service should be a Java application that can be started from the command line as follows: java PropertiesService [port number] If the optional port number is omitted, the service should default to port 4444. If the port is improperly specified (i.e., cannot be interpreted as an integer) or if unrecognized arguments are provided, the application should fail with an appropriate error message. The protocol is text-based and has three commands: set, get, and clear. The following table shows the command syntax and the required responses. Arguments are separated by whitespace (other than a newline); commands and responses are terminated by a newline.

The source code, report and screenshots can be found here.

Ant Project

The instructions for this project can be found at http://addepar.com/challenge.php. I wrote an email to the people at Addepar and realized that this problem was indeed very challenging. While it is a good exercise if you have time to ponder on. The project jar lib can be found here.

Iterative Tree Traversal

#include <iostream>

#include <stdlib.h>

#include <stack>

#include <queue>

#include <string>

#include <sstream>

using namespace std;

class NodeClass{

public:

int nodeValue;

NodeClass * leftPtr;

NodeClass * rightPtr;

NodeClass(int initializationValue){

this->nodeValue=initializationValue;

this->leftPtr=NULL;

this->rightPtr=NULL;

}

};

void IterativePreOrderTraversal(NodeClass * rootPtr){

stack<NodeClass *> nodeStack;

NodeClass * currPtr;

nodeStack.push(rootPtr);

while(nodeStack.size()!=0){

currPtr=nodeStack.top();

nodeStack.pop();

if (currPtr->rightPtr!=NULL)

nodeStack.push(currPtr->rightPtr);

if (currPtr->leftPtr!=NULL)

nodeStack.push(currPtr->leftPtr);

//print out the current node value

cout<<"Node value: "<<currPtr->nodeValue<<endl;

}

}

void IterativeInOrderTraversal(NodeClass * rootPtr){

//In-order traversal using iterative approach

NodeClass * currPtr=rootPtr;

stack<NodeClass *> nodeStack;

for(;;){

//keep pushing left child into stack

if (currPtr!=NULL){

nodeStack.push(currPtr);

currPtr=currPtr->leftPtr;

continue;

}

if (nodeStack.size()==0)

return;

// visit the current node

currPtr=nodeStack.top();

nodeStack.pop();

cout<<"Node value: "<<currPtr->nodeValue<<endl;

// visit the right child

currPtr=currPtr->rightPtr;

}

}

/*************************************************************************************

We use a prev variable to keep track of the previously-traversed node. Let’s assume

curr is the current node that’s on top of the stack. When prev is curr’s parent,

we are traversing down the tree. In this case, we try to traverse to curr’s left child

if available (ie, push left child to the stack). If it is not available, we look at

curr’s right child. If both left and right child do not exist (ie, curr is a leaf node),

we print curr’s value and pop it off the stack.

If prev is curr’s left child, we are traversing up the tree from the left. We look at

curr’s right child. If it is available, then traverse down the right child (ie, push right

child to the stack), otherwise print curr’s value and pop it off the stack.

If prev is curr’s right child, we are traversing up the tree from the right. In this case,

we print curr’s value and pop it off the stack.

*******************************************************************************************/

void IterativePostOrderTraversal(NodeClass *rootPtr) {

if (!rootPtr) return;

stack<NodeClass*> nodeStack;

nodeStack.push(rootPtr);

NodeClass *prev = NULL;

while (!nodeStack.empty()) {

NodeClass *curr = nodeStack.top();

if (!prev || prev->leftPtr == curr || prev->rightPtr == curr) {

if (curr->leftPtr)

nodeStack.push(curr->leftPtr);

else if (curr->rightPtr)

nodeStack.push(curr->rightPtr);

} else if (curr->leftPtr == prev) {

if (curr->rightPtr)

nodeStack.push(curr->rightPtr);

} else {

cout << "Node value: "<< curr->nodeValue << endl;

nodeStack.pop();

}

prev = curr;

}

}

/*************************************************************************************

1. Push the root node to the first stack.

2. Pop a node from the first stack, and push it to the second stack.

3. Then push its left child followed by its right child to the first stack.

4. Repeat step 2) and 3) until the first stack is empty.

5. Once done, the second stack would have all the nodes ready to be traversed in post-order.

Pop off the nodes from the second stack one by one and you?re done.

**************************************************************************************/

void postOrderTraversalIterativeTwoStacks(NodeClass *rootPtr) {

if (!rootPtr) return;

stack<NodeClass*> s;

stack<NodeClass*> output;

s.push(rootPtr);

while (!s.empty()) {

NodeClass *curr = s.top();

output.push(curr);

s.pop();

if (curr->leftPtr)

s.push(curr->leftPtr);

if (curr->rightPtr)

s.push(curr->rightPtr);

}

while (!output.empty()) {

cout <<"Node value: "<< output.top()->nodeValue <<endl;

output.pop();

}

}

void CreateTree(NodeClass ** rootPtr){

NodeClass* tmp0= new NodeClass(0);

NodeClass* tmp1=new NodeClass(1);

NodeClass* tmp2=new NodeClass(2);

tmp0->leftPtr=tmp1;

tmp0->rightPtr=tmp2;

NodeClass* tmp3=new NodeClass(3);

NodeClass* tmp4=new NodeClass(4);

NodeClass* tmp5=new NodeClass(5);

tmp1->leftPtr=tmp3;

tmp1->rightPtr=tmp4;

tmp2->rightPtr=tmp5;

*rootPtr=tmp0;

// tmp1,..., tmp5 local variables will be deleted after terminiation, but the tree structure remains

}

void BFSTraversal(NodeClass *rootPtr){

queue<NodeClass* > nodeQueue;

nodeQueue.push(rootPtr);

while(!nodeQueue.empty()){

NodeClass *currPtr = nodeQueue.front();

nodeQueue.pop();

cout <<"Node value: "<< currPtr->nodeValue <<endl;

if (currPtr->leftPtr)

nodeQueue.push(currPtr->leftPtr);

if (currPtr->rightPtr)

nodeQueue.push(currPtr->rightPtr);

}

}

void DeleteTree(NodeClass *rootPtr){

if (rootPtr!=NULL){

DeleteTree(rootPtr->leftPtr);

DeleteTree(rootPtr->rightPtr);

delete rootPtr;

}else

return;

}

Recursively Reverse Singly Linkedlist

#include <iostream>

#include <stdlib.h>

using namespace std;

class Node{

public:

int nodeValue;

Node* nextPtr;

Node(int v){

this->nodeValue=v;

this->nextPtr=NULL;

}

};

void PrintLinkedList(Node* beginPtr){

while(beginPtr!=NULL){

cout<<beginPtr->nodeValue<<" ";

beginPtr=beginPtr->nextPtr;

}

cout<<endl;

}

void ReverseLinkedList(Node *startPtr){

if (startPtr->nextPtr!=NULL){

ReverseLinkedList(startPtr->nextPtr);

startPtr->nextPtr->nextPtr=startPtr;

}

}

void DeleteLinkedList(Node* iterPtr){

Node* tmpPtr=iterPtr;

while(iterPtr!=NULL){

tmpPtr=iterPtr->nextPtr;

delete iterPtr;

iterPtr=tmpPtr;

}

}

void InsertIntoLinkedList(Node **head, int v)

{

Node *newnode = new Node(v);

newnode->nextPtr = *head;

*head = newnode;

}

void ReverseInPlace(Node **headPtr, Node **tailPtr)

{

Node *pre, *cur, *next;

pre = NULL;

cur=*headPtr;

while(cur!=NULL)

{

next = cur->nextPtr;

cur->nextPtr=pre;

pre=cur;

cur = next;

}

*tailPtr=*headPtr;

*headPtr=pre;

}

bool deleteANode(Node **headPtr, Node **tailPtr, int v)

{

if(*headPtr==NULL)

return false;

if((*headPtr)->nodeValue == v)

{

Node *temp = *headPtr;

*headPtr = (*headPtr)->nextPtr;

delete temp;

if(*headPtr==NULL)

*tailPtr=NULL;

return true;

}

Node *cur = (*headPtr)->nextPtr;

Node *pre = *headPtr;

while(cur!=NULL)

{

if(cur->nodeValue == v)

{

pre->nextPtr=cur->nextPtr;

delete cur;

if(pre->nextPtr==NULL)

*tailPtr=pre;

return true;

}

pre=cur;

cur=cur->nextPtr;

}

return false;

}

//insert value v after a

bool InsertAfter(Node **head, Node **tail, int a, int v)

{

Node *cur = *head;

while(cur!=NULL)

{

if(cur->nodeValue == a)

{

Node *newnode = new Node(v);

newnode->nextPtr=cur->nextPtr;

cur->nextPtr=newnode;

if(newnode->nextPtr==NULL)

*tail=newnode;

return true;

}

cur=cur->nextPtr;

}

return false;

}

int main(int,char*[]){

//build a singly linked list

Node* headPtr=NULL;

/* Node* tmp1=new Node(1);

headPtr=tmp1;

Node* tmp2=new Node(2);

tmp1->nextPtr=tmp2;

tmp1=new Node(3);

tmp2->nextPtr=tmp1;

tmp2=new Node(4);

tmp1->nextPtr=tmp2;

tmp1=new Node(5);

tmp2->nextPtr=tmp1;

tmp1->nextPtr=NULL; */

for(int i=1; i<=5; i++)

InsertIntoLinkedList(&headPtr, i);

//Print out the node values in the linked list, should be 1 2 3 4 5

PrintLinkedList(headPtr);

//Reverse the linked list in recursion

Node* tailPtr=headPtr;

while (tailPtr->nextPtr!=NULL){

tailPtr=tailPtr->nextPtr;

}

ReverseLinkedList(headPtr);

headPtr->nextPtr=NULL;

headPtr=tailPtr;

PrintLinkedList(headPtr);

DeleteLinkedList(headPtr);

headPtr=NULL;

//verify on deletion operation

// PrintLinkedList(headPtr); error headPtr points to unallocated memeory space

}

Sorting using stack

#include <time.h>

void createStack(stack<int>& createStack){

/* initialize random seed: */

srand ( time(NULL) );

const int n=50;

for (int i=0;i<n;i++){

createStack.push(rand()%100);

}

}

void stackSort(stack<int>& opStack,int terminateLevel,int currLevel){

if (currLevel<=terminateLevel)

return;

else{

int x=opStack.top();

opStack.pop();

int newx=opStack.top();

if (x<newx){

opStack.pop();

opStack.push(x);

x=newx;

}

stackSort(opStack,terminateLevel,--currLevel);

opStack.push(x);

}

}

int main(){

stack<int> numberStack;

createStack(numberStack);

int n=numberStack.size();

// sort in ascending order

for (int i=1;i<=n;i++){

stackSort(numberStack,i,n);

}

for (int i=0;i<n;i++){

cout<<numberStack.top()<<endl;

numberStack.pop();

}

}

Print all valid combinations of parentheses

int n=3;

char stk[100];

void printlegal( int ipos, int ileft, int iright)

{

if (ipos==2*n-1)

{

stk[ipos]=')';

for (int i=0; i<n*2; i++)

printf("%c", stk[i]);

printf("\n");

}else if (ileft==iright)

{

stk[ipos] = '(';

printlegal( ipos+1, ileft-1, iright );

}else if (ileft==0)

{

stk[ipos] = ')';

printlegal( ipos+1, ileft, iright-1 );

}else{

stk[ipos] = '(';

printlegal( ipos+1, ileft-1, iright );

stk[ipos] = ')';

printlegal( ipos+1, ileft, iright-1 );

}

}

int main(int argc, char* argv[])

{

printlegal(0, n, n);

return 0;

}

Serialization of BT

#include <stdlib.h>

#include <stdio.h>

#include <string.h>

typedef struct Node{

char val;

struct Node* left;

struct Node* right;

}TreeNode;

void serialize(TreeNode* node){

if (node == NULL){

printf("()");

return;

}

printf("(%c", node->val);

serialize(node->left);

serialize(node->right);

printf(")");

}

TreeNode* deserialize(char* arr, int* offset){

//printf("%c %d \n", arr[*offset], *offset);

if (arr[(*offset)]=='('){

char val = arr[ (*offset) + 1];

*offset = (*offset) + 1;

if (val == ')'){

*offset = *offset + 1;

return NULL;

}else{

TreeNode* n = (TreeNode*) malloc(sizeof(TreeNode));

n->val = val;

//printf("%c %d\n", n->val, *offset);

//initialize

n->left = NULL;

n->right = NULL;

(*offset) = (*offset) + 1;

if (arr[*offset] == ')'){

*offset = (*offset) + 1;

return n;

} else{

TreeNode* left = deserialize(arr, offset);

//printf("left: %c %d\n", n->val, *offset);

n->left = left;

}

//printf("me: %c %d\n", n->val, *offset);

if (arr[*offset] == ')'){

(*offset) = (*offset) + 1;

return n;

}else{

TreeNode* right = deserialize(arr, offset);

//printf("right: %c %d\n", n->val, *offset);

n->right = right;

}

(*offset) = (*offset) + 1;

return n;

}

}

}

int main(){

char* tree = "(a(b(e()())())(c()(d()())))";

int len = strlen(tree);

int* offset=(int*) malloc(sizeof(int));

*offset = 0;

TreeNode* root = deserialize(tree, offset);

printf("done\n");

serialize(root);

}

Test Interleaving of strings

import java.util.Stack;

import org.apache.commons.lang3.StringUtils;

/**

* @author Li, Larry

* Java implementation of testing whether a given string is the interleaved

* combination of two other given strings

*

*/

public class TestInterleaving {

private class CursorLocation{

private int testStringCursor = 0;

private int str1Cursor = 0;

private int str2Cursor = 0;

public CursorLocation(int testStringCursor, int str1Cursor, int str2Cursor ) {

this.testStringCursor = testStringCursor;

this.str1Cursor = str1Cursor;

this.str2Cursor = str2Cursor;

}

/**

* @return

*/

public int getStr1Cursor() {

return str1Cursor;

}

/**

* @return

*/

public int getStr2Cursor() {

return str2Cursor;

}

/**

* @return

*/

public int getTestStringCursor() {

return testStringCursor;

}

}

public static void main(String[] args) {

String testStr = "keeptomorrowworkingwillbeharbetterd";

String strOne = "keepworkinghard";

String strTwo = "tomorrowwillbebetter";

TestInterleaving testInterleaving = new TestInterleaving();

boolean isTestStringInterleaved = testInterleavingRecursion(testStr, strOne, strTwo);

boolean isTestStringInterleavedIter = testInterleavingIteration(testStr, strOne, strTwo,

testInterleaving);

System.out.println("The test string that is the interleaved of two given strings is: "

+ isTestStringInterleaved);

System.out.println("The test string that is the interleaved of two given strings is: "

+ isTestStringInterleavedIter);

}

private static boolean testInterleavingRecursion(String testStr, String str1, String str2){

if (testStr.length()!= (str1.length()+str2.length())){

return false;

} else {

if (testStr.length()==0)

return true;

boolean isFirstCharacterEqualForStr1 = isFirstCharacterEqual(testStr,str1);

boolean isFirstCharacterEqualForStr2 = isFirstCharacterEqual(testStr, str2);

if (isFirstCharacterEqualForStr1 || isFirstCharacterEqualForStr2){

if (isFirstCharacterEqualForStr1 && isFirstCharacterEqualForStr2){

return testInterleavingRecursion(testStr.substring(1), str1.substring(1), str2)

|| testInterleavingRecursion(testStr.substring(1), str1, str2.substring(1));

} else if (isFirstCharacterEqualForStr1){

return testInterleavingRecursion(testStr.substring(1), str1.substring(1), str2);

} else {

return testInterleavingRecursion(testStr.substring(1), str1, str2.substring(1));

}

} else {

return false;

}

}

}

private static boolean isFirstCharacterEqual(String str1, String str2){

if (StringUtils.isNotEmpty(str1) && StringUtils.isNotEmpty(str2)){

return str1.charAt(0) == str2.charAt(0);

} else {

return false;

}

}

private static boolean testInterleavingIteration(String testStr, String str1, String str2,

TestInterleaving testInterleaving){

// The trick is to use stack to track down all possible combinations

if (testStr.length()!= (str1.length()+str2.length())){

return false;

} else {

Stack<CursorLocation> stackTrace = new Stack<CursorLocation>();

int testStrCursor =0;

int str1Cursor = 0;

int str2Cursor= 0;

stackTrace.add(testInterleaving.new CursorLocation(testStrCursor, str1Cursor, str2Cursor));

while (!stackTrace.isEmpty()){

// pop up the cursor location and do the test

CursorLocation cursorLocation = stackTrace.pop();

testStrCursor = cursorLocation.getTestStringCursor();

str1Cursor = cursorLocation.getStr1Cursor();

str2Cursor= cursorLocation.getStr2Cursor();

while (testStrCursor<testStr.length()-1){

boolean isCurrentCharacterEqualForStr1 = false;

boolean isCurrentCharacterEqualForStr2 = false;

if (str1Cursor<str1.length())

isCurrentCharacterEqualForStr1 = testStr.charAt(testStrCursor)

== str1.charAt(str1Cursor);

if (str2Cursor<str2.length())

isCurrentCharacterEqualForStr2 = testStr.charAt(testStrCursor)

== str2.charAt(str2Cursor);

if (isCurrentCharacterEqualForStr1 && isCurrentCharacterEqualForStr2){

stackTrace.add(testInterleaving.new CursorLocation(testStrCursor,

str1Cursor, str2Cursor));

//Deal with the first match with string one, the 2nd case saved to the

//stack for later processing

testStrCursor++;

str1Cursor++;

} else if (isCurrentCharacterEqualForStr1){

testStrCursor++;

str1Cursor++;

} else if (isCurrentCharacterEqualForStr2){

testStrCursor++;

str2Cursor++;

} else {

break;

}

}

// decide if the string is matched to the end

if (testStrCursor == testStr.length()-1){

if (str1Cursor == str1.length()-1){

if (testStr.charAt(testStrCursor) == str1.charAt(str1Cursor))

return true;

} else if (str2Cursor == str2.length()-1){

if (testStr.charAt(testStrCursor) == str2.charAt(str2Cursor))

return true;

}

}

}

return false;

}

}

}

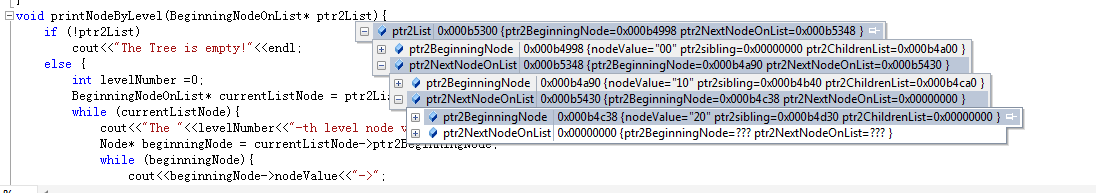

Setup sibling links for a tree (May not be a binary tree)

We use a singly linked list to store the pointer to the first node at each level, once the sibling links are setup, traverse from this first node until meet a null pointer. The given example's linked list looks like this:

#include <iostream>

#include <string>

#include <queue>

using namespace std;

/***********************************************************

* Author: Larry (Zhirong) Li March 4, 2012

* Setup Sibling Link for a tree, the given example is

* (00)

* / \

* (10) (11)

* / | \ / \

* (20)(21)(22) (23)(24)

************************************************************/

class Node;

class ChildrenListNode{

public:

Node* ptr2Child;

ChildrenListNode* ptr2NextChild;

ChildrenListNode(Node* ptr1){

this->ptr2Child =ptr1;

this->ptr2NextChild = NULL;

}

};

class Node{

public:

string nodeValue;

Node* ptr2sibling;

ChildrenListNode* ptr2ChildrenList;

Node(string value){

this->nodeValue = value;

this->ptr2sibling = NULL;

this->ptr2ChildrenList = NULL;

}

};

class BeginningNodeOnList{

public:

Node* ptr2BeginningNode;

BeginningNodeOnList* ptr2NextNodeOnList;

BeginningNodeOnList(Node* ptr){

this->ptr2BeginningNode = ptr;

this->ptr2NextNodeOnList = NULL;

}

};

Node* createSampleTree(){

Node* ptr00 = new Node(string("00"));

Node* ptr10 = new Node("10");

ChildrenListNode* childPtr = new ChildrenListNode(ptr10);

Node* ptr11 = new Node("11");

ChildrenListNode* nextChildPtr = new ChildrenListNode(ptr11);

ptr00->ptr2ChildrenList = childPtr;

childPtr->ptr2NextChild = nextChildPtr;

//Create the child node 20,21,22 for parent node 10

Node* ptr20 = new Node("20");

Node* ptr21 = new Node("21");

Node* ptr22 = new Node("22");

childPtr = new ChildrenListNode(ptr20);

nextChildPtr = new ChildrenListNode(ptr21);

ptr10->ptr2ChildrenList = childPtr;

childPtr->ptr2NextChild = nextChildPtr;

childPtr = nextChildPtr;

nextChildPtr = new ChildrenListNode(ptr22);

childPtr->ptr2NextChild = nextChildPtr;

//Create the child node 23,24 for parent node 11

Node* ptr23 = new Node("23");

Node* ptr24 = new Node("24");

childPtr = new ChildrenListNode(ptr23);

nextChildPtr = new ChildrenListNode(ptr24);

ptr11->ptr2ChildrenList = childPtr;

childPtr->ptr2NextChild = nextChildPtr;

ptr20->ptr2ChildrenList = NULL;

ptr21->ptr2ChildrenList = NULL;

ptr22->ptr2ChildrenList = NULL;

ptr23->ptr2ChildrenList = NULL;

ptr24->ptr2ChildrenList = NULL;

return ptr00;

}

BeginningNodeOnList* setupSiblingLink(Node* ptr){

//always point to the tail of the list

BeginningNodeOnList* headPtr2BeginningNodeList = NULL;

BeginningNodeOnList* currPtr2BeginningNodeList = NULL;

if (!ptr)

return headPtr2BeginningNodeList;

else {

queue<Node*> nodeQueue;

queue<Node*> sameLevelNodeQueue;

nodeQueue.push(ptr);

bool firstNode = true;

while(!nodeQueue.empty()){

//pop all elements in the queue to the second queue

while(!nodeQueue.empty()){

sameLevelNodeQueue.push(nodeQueue.front());

nodeQueue.pop();

}

/*Since the sameLevelNodeQueue is not empty here, the first element

must be the beginnng node at each level */

BeginningNodeOnList* ptr2BeginningNodeList =

new BeginningNodeOnList(sameLevelNodeQueue.front());

if (firstNode){

headPtr2BeginningNodeList = ptr2BeginningNodeList;

currPtr2BeginningNodeList = ptr2BeginningNodeList;

firstNode = false;

} else {

currPtr2BeginningNodeList->ptr2NextNodeOnList = ptr2BeginningNodeList;

currPtr2BeginningNodeList = ptr2BeginningNodeList;

}

while(!sameLevelNodeQueue.empty()){

//push all children of the popped nodes to the node queue

Node* currPtr = sameLevelNodeQueue.front();

sameLevelNodeQueue.pop();

ChildrenListNode* ptr2ChildrenList = currPtr->ptr2ChildrenList;

while(ptr2ChildrenList){

if (ptr2ChildrenList->ptr2Child)

nodeQueue.push(ptr2ChildrenList->ptr2Child);

ptr2ChildrenList = ptr2ChildrenList->ptr2NextChild;

}

//Setup the sibling link

if(!sameLevelNodeQueue.empty())

currPtr->ptr2sibling = sameLevelNodeQueue.front();

}

}

return headPtr2BeginningNodeList;

}

}

void printNodeByLevel(BeginningNodeOnList* ptr2List){

if (!ptr2List)

cout<<"The Tree is empty!"<<endl;

else {

int levelNumber =0;

BeginningNodeOnList* currentListNode = ptr2List;

while (currentListNode){

cout<<"The "<<levelNumber<<"-th level node values are:"<<endl;

Node* beginningNode = currentListNode->ptr2BeginningNode;

while (beginningNode){

cout<<beginningNode->nodeValue;

beginningNode=beginningNode->ptr2sibling;

if (beginningNode)

cout<<"->";

}

cout<<endl;

levelNumber++;

currentListNode = currentListNode->ptr2NextNodeOnList;

}

}

}

void freeChildrenListMemory(ChildrenListNode* currChildrenListPtr){

if(!currChildrenListPtr) return;

freeChildrenListMemory(currChildrenListPtr->ptr2NextChild);

delete currChildrenListPtr;

}

void freeTreeMemory(Node* rootPtr){

if (!rootPtr) return;

ChildrenListNode* currChildrenListPtr = rootPtr->ptr2ChildrenList;

if (currChildrenListPtr){

while (currChildrenListPtr){

freeTreeMemory(currChildrenListPtr->ptr2Child);

currChildrenListPtr = currChildrenListPtr->ptr2NextChild;

}

freeChildrenListMemory(rootPtr->ptr2ChildrenList);

}

delete rootPtr;

}

void freeLinkedListMemory(BeginningNodeOnList* ptr2List){

if(!ptr2List) return;

freeLinkedListMemory(ptr2List->ptr2NextNodeOnList);

delete ptr2List;

}

int main(){

Node* rootPtr = createSampleTree();

BeginningNodeOnList* ptr2List = setupSiblingLink(rootPtr);

printNodeByLevel(ptr2List);

//Delete Allocated Memory

freeLinkedListMemory(ptr2List);

freeTreeMemory(rootPtr);

}

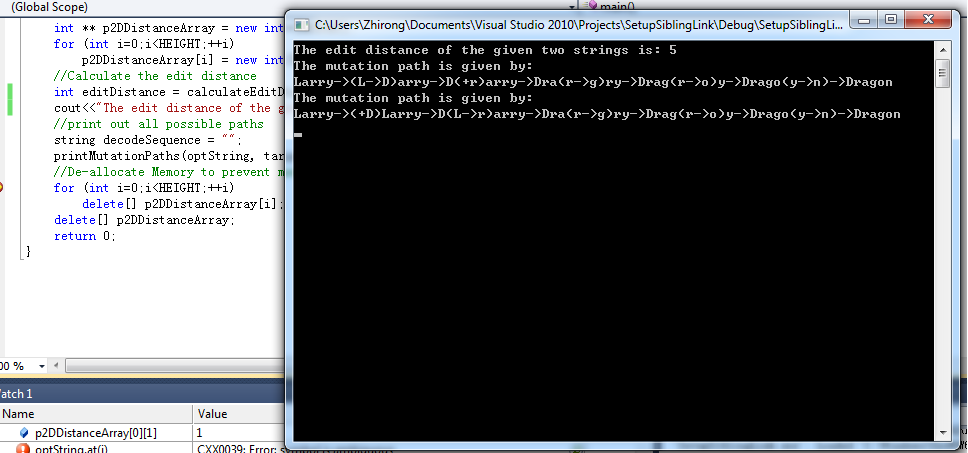

Enumerate all mutation paths using #(Edit Distance) steps

The edit distance between two strings is the minimum number of characters in one string to be updated, inserted, or deleted to get the second string. This is a classic application of dynamic programming to solve this problem. There are many online code examples in calculating this edit distance. However, I was not lucky enough to find an algorithm to print out all such mutation paths using #(edit distance) steps.

I am using recursion to capture all such transition paths. In order to calculate the edit distance, notice that f(i,j) means how many steps at minimum cost are needed to convert the first i characters in the original(optString) to the first j characters in the target (targetString). Suppose the length of original string is I and the length of target string is J. Then i can take values from 0 to I and j can take values from 0 to J. So I create a 2D array of size (I+1)-by-(J+1). Then the following recursion can be established.

f(i,j) =

if optString.at(i-1) == targetString.at(j-1)

f(i,j)=f(i-1,j-1)

else {

f(i,j)= min(f(i-1,j),f(i,j-1),f(i-1,j-1)) +1

}

Have in mind that f(i-1,j) +1 = f(i,j) corresponds to deletion, i.e., the first (i-1) characters can already be converted to the first j characters in target string, then in order to reach f(i,j), the i-th character has to be deleted, then operation to f(i,j) is achieved.

Notice that in an optimized transition, insertion and deletion can not be paired (otherwise it is not the minimum step transition)

The boundary condition is simple to set,

f(0,j) =j, since we have to insert the first j characters from the target string to optString.

f(i,0) =i, since we have to delete the first i characters from the optString.

Then using two for loops to get the edit distance for every f(i,j)

The second part is to print the mutation paths.

The optStringCursor and targetStringCursor always point to the current location of the string. We traverse from the end f(I,J) ( optStringCursor=I-1, targetStringCursor=J-1) see how the optimal path is reached here. If f(i,j) = f(i-1,j)+1 and f(i-1,j-1)+1, that means both deletion and replacement can reach the same destination. So we need to traverse both paths to enumerate all possible paths of such kind.

The base case in the recursion is:

either we backward traverse to some f(i,j)=0, then we can directly start from here for printing or we encounter the case f(i,0) or f(0,j) so we directly delete/insert characters then print out the rest using the decode sequence.

The decodeSequence is used to record how we reach the current f(i,j) from f(I,J), for example,

optString ="la"

targetString = "dis"

the edit distance for the above is 3, and we could have decode sequence (I: insertion, D: deletion, R: replacement, S: skip)

RRI: la->(l->d)a->d(a->i)-> di(+s)->dis

RIR: la->(l->d)a->d(+i)a->di(a->s)->dis

IRR: la->(+d)la->d(l->i)a->di(a->s)->dis

#include <iostream>

#include <string>

#include <algorithm>

using namespace std;

/***************************************************

Author: Larry (Zhirong), Li

March 07,2012@Toronto

Print out all transition paths using edit distance

*****************************************************/

int calculateEditDistance(string optString, string targetString, int HEIGHT, int WIDTH, int** p2DDistanceArray){

for(int i=0;i<HEIGHT;i++)

p2DDistanceArray[i][0] = i;

for(int j=0;j<WIDTH;j++)

p2DDistanceArray[0][j] = j;

for (int i=1;i<HEIGHT;i++){

for(int j=1;j<WIDTH;j++)

{

if (optString.at(i-1) == targetString.at(j-1))

p2DDistanceArray[i][j] = p2DDistanceArray[i-1][j-1];

else {

//f(i,j-1)+1=f(i,j)

int insertion = p2DDistanceArray[i][j-1] + 1;

//f(i-1,j)+1=f(i,j)

int deletion = p2DDistanceArray[i-1][j] + 1;

//f(i-1,j-1)+1=f(i,j)

int replacement = p2DDistanceArray[i-1][j-1] + 1;

p2DDistanceArray[i][j] = min(min(insertion, deletion), replacement);

}

}

}

return p2DDistanceArray[HEIGHT-1][WIDTH-1];

}

void printMutationPaths(string& optString, string& targetString, int optStringCursor, int targetStringCursor,

int** p2DDistanceArray, string decodeSequence){

//base cases

if ((p2DDistanceArray[optStringCursor+1][targetStringCursor+1] == 0) || (optStringCursor+1 == 0)

|| (targetStringCursor+1 ==0) ){

//Print mutation path

cout<<"The mutation path is given by:"<<endl;

cout<<optString;

//There should be #decodeSequence.length() mutation steps

string currMuatedOptString = optString.substr(0, optStringCursor+1);

//Decide which boundary case we are in

if (optStringCursor+1 == 0){

//insert #(targetStringCursor-optStringCursor) characters from targetString

int currCursor = -1;

for (int i=0;i<targetStringCursor-optStringCursor;++i){

cout<<"->"<<currMuatedOptString<<"(+"<<targetString.substr(currCursor+1,1)<<")"

<<optString.substr(optStringCursor+1);

currMuatedOptString = currMuatedOptString + targetString.substr(currCursor+1,1);

currCursor++;

}

}else if (targetStringCursor+1 ==0) {

//delete # (optStringCursor-targetStringCursor) characters from optString

int currCursor = -1;

currMuatedOptString = optString.substr(0, currCursor+1);

for (int i=0;i<optStringCursor-targetStringCursor;++i){

cout<<"->"<<currMuatedOptString<<"(-"<<optString.substr(currCursor+1,1)<<")"

<<optString.substr(currCursor+2);

currCursor++;

}

}

for(unsigned int i=0;i<decodeSequence.length();++i){

switch(decodeSequence.at(i)){

case 'I':

cout<<"->"<<currMuatedOptString<<"(+"<<targetString.substr(targetStringCursor+1,1)

<<")"<<optString.substr(optStringCursor+1);

currMuatedOptString = currMuatedOptString +

targetString.substr(targetStringCursor+1,1);

targetStringCursor++;

break;

case 'D':

cout<<"->"<<currMuatedOptString<<"(-"<<optString.substr(optStringCursor+1,1)<<")"

<<optString.substr(optStringCursor+2);

optStringCursor++;

break;

case 'R':

cout<<"->"<<currMuatedOptString<<"("<<optString.substr(optStringCursor+1,1)

<<"->"<<targetString.substr(targetStringCursor+1,1)<<")"

<<optString.substr(optStringCursor+2);

currMuatedOptString = currMuatedOptString +

targetString.substr(targetStringCursor+1,1);

optStringCursor++;

targetStringCursor++;

break;

case 'S':

currMuatedOptString = currMuatedOptString +

targetString.substr(targetStringCursor+1,1);

optStringCursor++;

targetStringCursor++;

break;

default:

cout << "unknown decoding sequence: terminate";

exit(1);

}

}

cout<<"->"<<targetString<<endl;

}else if(optString.at(optStringCursor) == targetString.at(targetStringCursor)){

printMutationPaths(optString, targetString, optStringCursor-1, targetStringCursor-1,

p2DDistanceArray, "S" + decodeSequence);

}else {

//f(i,j-1)+1=f(i,j)

if (p2DDistanceArray[optStringCursor+1][targetStringCursor+1]

== p2DDistanceArray[optStringCursor+1][targetStringCursor]+1)

printMutationPaths(optString, targetString, optStringCursor, targetStringCursor-1,

p2DDistanceArray, "I" + decodeSequence);

//f(i-1,j)+1=f(i,j)

if (p2DDistanceArray[optStringCursor+1][targetStringCursor+1]

== p2DDistanceArray[optStringCursor][targetStringCursor+1]+1)

printMutationPaths(optString, targetString, optStringCursor-1, targetStringCursor,

p2DDistanceArray, "D" + decodeSequence);

//f(i-1,j-1)+1=f(i,j)

if (p2DDistanceArray[optStringCursor+1][targetStringCursor+1]

== p2DDistanceArray[optStringCursor][targetStringCursor]+1)

printMutationPaths(optString, targetString, optStringCursor-1, targetStringCursor-1,

p2DDistanceArray, "R" + decodeSequence);

}

}

int main(){

string optString ("Larry");

string targetString ("Dragon");

int HEIGHT = optString.length()+1;

int WIDTH = targetString.length()+1;

//Allocate Memory

int ** p2DDistanceArray = new int*[HEIGHT];

for (int i=0;i<HEIGHT;++i)

p2DDistanceArray[i] = new int[WIDTH];

//Calculate the edit distance

int editDistance = calculateEditDistance(optString, targetString, HEIGHT, WIDTH, p2DDistanceArray);

cout<<"The edit distance of the given two strings is: "<<editDistance<<endl;

//print out all possible paths

string decodeSequence = "";

printMutationPaths(optString, targetString, optString.length()-1, targetString.length()-1,

p2DDistanceArray,decodeSequence);

//De-allocate Memory to prevent memory leak

for (int i=0;i<HEIGHT;++i)

delete[] p2DDistanceArray[i];

delete[] p2DDistanceArray;

return 0;

}

Calculate # visited points on an infinite chessboard

This is not the Knight's tour problem (Hamiltonian Cycle problem). Indeed, one needs to find out how many positions (including the start point) a knight/horse can jump to after 10 moves. :) The code answer is 1345, within the 41-by-41 search range.

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.util.ArrayList;

import java.util.HashSet;

import java.util.Iterator;

import java.util.List;

import java.util.Set;

import org.apache.commons.lang3.builder.EqualsBuilder;

import org.apache.commons.lang3.builder.HashCodeBuilder;

/**

* imagine an infinite chess board. If the horse jumps from the origin, how many

* distinct positions can the horse possibly visit after 10 moves.

* @author Larry, Li March 9, 2012 @ Toronto

*

*/

public class ComputeHorseJumps {

class Coordinate {

private int x;

private int y;

public Coordinate(int x, int y) {

this.x = x;

this.y = y;

}

public int getX() {

return x;

}

public int getY() {

return y;

}

public void setX(int i) {

x = i;

}

public void setY(int i) {

y = i;

}

public boolean equals(Object obj) {

if (obj == null)

return false;

if (obj == this)

return true;

if (obj.getClass() != getClass())

return false;

Coordinate other = (Coordinate) obj;

return new EqualsBuilder().append(this.getX(), other.getX())

.append(this.getY(), other.getY()).isEquals();

}

public int hashCode() {

return new HashCodeBuilder().append(this.getX()).append(this.getY()).toHashCode();

}

}

public static void main(String[] args) {

int numberOfMoves=0;

String line = null;

ComputeHorseJumps classInstance = new ComputeHorseJumps();

try {

BufferedReader is = new BufferedReader(new InputStreamReader(System.in));

line = is.readLine();

numberOfMoves = Integer.parseInt(line);

} catch (NumberFormatException ex) {

System.err.println("Not a valid number: " + line);

} catch (IOException e) {

System.err.println("Unexpected IO ERROR: " + e);

}

int numberOfDistinctPoints = calculateNumberOfDistinctPoints(numberOfMoves, classInstance);

System.out.println("The total number after " + numberOfMoves + " move(s) is " + numberOfDistinctPoints);

}

/**

* @param numberOfMoves

* @param classInstance

* @return

*/

private static int calculateNumberOfDistinctPoints(int numberOfMoves, ComputeHorseJumps classInstance) {

// TODO Auto-generated method stub

Coordinate startingPoint = classInstance.new Coordinate(0,0);

Set<Coordinate> visitedPoints = new HashSet<Coordinate>();

visitedPoints.add(startingPoint);

//create a processing queue

List<Coordinate> processQueue = new ArrayList<Coordinate>();

List<Coordinate> newProcessQueue = new ArrayList<Coordinate>();

processQueue.add(startingPoint);

for(int i=0;i<numberOfMoves;i++){

newProcessQueue.clear();

for(Iterator<Coordinate> iter = processQueue.iterator(); iter.hasNext();){

Coordinate currentPoint = (Coordinate) iter.next();

int xCoordinate = currentPoint.getX();

int yCoordinate = currentPoint.getY();

// traverse 8 cases

putIntoSetAndList(xCoordinate+1, yCoordinate+2, visitedPoints, newProcessQueue, classInstance);

putIntoSetAndList(xCoordinate-1, yCoordinate+2, visitedPoints, newProcessQueue, classInstance);

putIntoSetAndList(xCoordinate-2, yCoordinate+1, visitedPoints, newProcessQueue, classInstance);

putIntoSetAndList(xCoordinate-2, yCoordinate-1, visitedPoints, newProcessQueue, classInstance);

putIntoSetAndList(xCoordinate-1, yCoordinate-2, visitedPoints, newProcessQueue, classInstance);

putIntoSetAndList(xCoordinate+1, yCoordinate-2, visitedPoints, newProcessQueue, classInstance);

putIntoSetAndList(xCoordinate+2, yCoordinate-1, visitedPoints, newProcessQueue, classInstance);

putIntoSetAndList(xCoordinate+2, yCoordinate+1, visitedPoints, newProcessQueue, classInstance);

}

processQueue.clear();

processQueue.addAll(newProcessQueue);

}

return visitedPoints.size();

}

private static void putIntoSetAndList(int xCoordinate, int yCoordinate, Set<Coordinate> visitedPoints,

List<Coordinate> newProcessQueue, ComputeHorseJumps classInstance) {

// TODO Auto-generated method stub

Coordinate newPoint = classInstance.new Coordinate(xCoordinate, yCoordinate);

if (!visitedPoints.contains(newPoint)){

visitedPoints.add(newPoint);

newProcessQueue.add(newPoint);

}

}

}

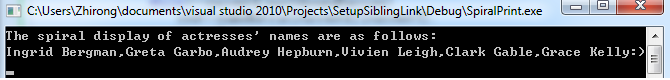

Spiral Print of Actresses (Except for Clark Gable)Names

The purpose of this problem is to clockwise spirally print out the names. These names are fairly well-known if you love movies of old time.

#include <iostream>

using namespace std;

/****************************************************

* Spiral print of movie stars' names

* Author@Larry, Zhirong Li on March 16, 2012

* Toronto

***************************************************/

const int columnWidth = 10;

const int rowHeight = 8;

static char nameMatrix[rowHeight][columnWidth] =

{ {'I','n','g','r','i','d',' ','B','e','r'},

{'e','y',' ','H','e','p','b','u','r','g'},

{'r','C','l','a','r','k',' ','G','n','m'},

{'d',',','e',' ','K','e','l','a',',','a'},

{'u','h','c',')',':','y','l','b','V','n'},

{'A','g','a','r','G',',','e','l','i',','},

{',','i','e','L',' ','n','e','i','v','G'},

{'o','b','r','a','G',' ','a','t','e','r'}

};

void spiralPrint(){

// initialize the value of 4-way barriers

int eastboundBarrier = columnWidth;

int southboundBarrier = rowHeight;

int westboundBarrier = -1;

int northboundBarrier = 0;

int currentX = 0;

int currentY = 0;

cout<<"The spiral display of actresses' names are as follows:"<<endl;

cout<<nameMatrix[currentX][currentY];

while(true){

// heading east

if (currentY == eastboundBarrier-1 ){

break;

} else {

while(++currentY<eastboundBarrier)

cout<<nameMatrix[currentX][currentY];

eastboundBarrier--;

}

currentY--;

// heading south

if (currentX == southboundBarrier-1){

break;

} else {

while(++currentX<southboundBarrier)

cout<<nameMatrix[currentX][currentY];

southboundBarrier--;

}

currentX--;

// heading west

if (currentY == westboundBarrier+1){

break;

} else {

while(--currentY>westboundBarrier)

cout<<nameMatrix[currentX][currentY];

westboundBarrier++;

}

currentY++;

// heading north

if (currentX == northboundBarrier+1){

break;

} else {

while(--currentX>northboundBarrier)

cout<<nameMatrix[currentX][currentY];

northboundBarrier++;

}

currentX++;

}

}

int main(int argc, char* argv[]){

spiralPrint();

return 0;

}

Quicksort On Singly Linked List

You are not allowed to change the node values of a singly linked list of integers. You are also required to use O(1) space to sort this list. Quicksort is the best candidate. One important thing that should get your attention is that you can only manipulate the pointers and the header pointer could be changed. Thus pointer to the pointer to the list is passed in.

#include <iostream>

#include <cstdlib>

#include <time.h>

using namespace std;

/**************************************************************************************************

* Author@Larry, Zhirong Li March 20, 2012 in Toronto

***************************************************************************************************

* Assume you are given a list of integers with node definition

* typedef struct Node{

* const int nodeValue;

* Node * pNextNode;

* Node(int value=0, Node* ptr=NULL): nodeValue(value),pNextNode(ptr){}

* }SinglyLinkedListNode;

*

* You are only allowed to use O(1) space, how would you sort out this list in ascending order

*

* Refer to the following partition logic in the quicksort algorithm

*

* The Core of the Partition Algorithm

* int i = left - 1;

* int j = right;

* while (true) {

* while (less(a[++i], a[right])) // find item on left to swap

* ; // a[right] acts as sentinel

* while (less(a[right], a[--j])) // find item on right to swap

* if (j == left) break; // don't go out-of-bounds

* if (i >= j) break; // check if pointers cross

* exch(a, i, j); // swap two elements into place

* }

* exch(a, i, right); // swap with partition element

* return i;

*************************************************************************************************/

typedef struct Node{

const int nodeValue;

Node * pNextNode;

Node(int value=0, Node* ptr=NULL): nodeValue(value),pNextNode(ptr){}

}SinglyLinkedListNode;

Node* AdvancePointerToGivenIndexNode(Node ** prevPointer, Node** currPointer, int index, Node ** p2pHeader){

*prevPointer = NULL;

*currPointer = *p2pHeader;

for(int i=0;i<index;i++){

*prevPointer = *currPointer;

*currPointer = (*currPointer)->pNextNode;

}

return *currPointer;

}

bool less(Node* leftOprand, Node* rightOprand){

return (leftOprand->nodeValue<rightOprand->nodeValue);

}

void exch(Node* p2PrevBigNode, Node* p2BigNode, Node* p2PrevSmallNode, Node* p2SmallNode){

// pHeader->(3)->(2)->(1) case: swap (3) and (1)

if (p2PrevBigNode)

p2PrevBigNode->pNextNode = p2SmallNode;

// pHeader->(2)->(1) case: swap (2) and (1)

if (p2PrevSmallNode != p2BigNode)

p2PrevSmallNode->pNextNode = p2BigNode;

Node* tempNode = p2SmallNode->pNextNode;

if (p2SmallNode!=p2BigNode->pNextNode)

p2SmallNode->pNextNode = p2BigNode->pNextNode;

else

p2SmallNode->pNextNode = p2BigNode;

p2BigNode->pNextNode = tempNode;

}

int partition(Node ** p2pHeader, int firstElement, int lastElement){

int i = firstElement -1;

int j = lastElement;

Node* p2PreviousNodeOfFirstBigElement, *p2FirstBigElement,*p2PreviousNodeOfFirstSmallElement,

*p2FirstSmallElement;

Node* p2LastNode = *p2pHeader;

for(int k=0;k<lastElement;k++){

p2LastNode = p2LastNode->pNextNode;

}

while(true){

// find item on left to swap

//Important note: The linked list keeps changing so have to scan from header for (i+1)th element

// and that is the reason to use ** p2pHeader to point to the start position all the time

while (less(AdvancePointerToGivenIndexNode(&p2PreviousNodeOfFirstBigElement,&p2FirstBigElement,

++i,p2pHeader), p2LastNode));

// find item on right to swap

while (less(p2LastNode,AdvancePointerToGivenIndexNode(&p2PreviousNodeOfFirstSmallElement,

&p2FirstSmallElement,--j,p2pHeader))){

// don't go out-of-bounds

if (j == firstElement)

break;

}

// check if pointers cross

if (i >= j)

break;

//exch(i, j)

exch(p2PreviousNodeOfFirstBigElement, p2FirstBigElement, p2PreviousNodeOfFirstSmallElement,

p2FirstSmallElement);

if (i==0) *p2pHeader = p2FirstSmallElement;

}

if (i!=lastElement){

AdvancePointerToGivenIndexNode(&p2PreviousNodeOfFirstSmallElement,&p2FirstSmallElement,lastElement,

p2pHeader);

//exch(i,lastElement)

exch(p2PreviousNodeOfFirstBigElement, p2FirstBigElement,p2PreviousNodeOfFirstSmallElement,

p2FirstSmallElement);

if (i==0) *p2pHeader = p2FirstSmallElement;

}

return i;

}

void quickSortOnSinglyLinkedList(Node ** p2pHeader, int firstElement, int lastElement){

if (firstElement>=lastElement)

return;

int pivotPosition = partition(p2pHeader, firstElement, lastElement);

quickSortOnSinglyLinkedList(p2pHeader,firstElement, pivotPosition-1);

quickSortOnSinglyLinkedList(p2pHeader,pivotPosition+1, lastElement);

}

int main(int argc, char ** argv){

const unsigned int QUEUELENGTH = 100;

//initialize the seed of the PRNG

srand(time(NULL));

//Create a singly linked list

Node* pHeader = NULL;

Node* pLastNode = NULL;

for(unsigned int i=0;i<QUEUELENGTH;i++){

Node* pNewNode = new Node(rand()%1000,pLastNode);

pLastNode = pNewNode;

}

pHeader = pLastNode;

//Debug: Print out the list

cout<<"The values in the list are: "<<endl;

while (pLastNode){

cout<<pLastNode->nodeValue<<" ";

pLastNode = pLastNode->pNextNode;

}

cout<<endl;

quickSortOnSinglyLinkedList(&pHeader,0,QUEUELENGTH-1);

//Debug: Print out the list after the sorting process

cout<<"The values in the list after sort are: "<<endl;

pLastNode = pHeader;

while (pLastNode){

cout<<pLastNode->nodeValue<<" ";

pLastNode = pLastNode->pNextNode;

}

//De-allocate list memory

pLastNode = pHeader;

while (pLastNode){

Node * pTemp = pLastNode->pNextNode;

delete pLastNode;

pLastNode = pTemp;

}

}

Check if identical BSTs from given number streams

Given two number streams (Assume the numbers are distinct, your task is to find whether they will create the same BST or not. I give a naive approach but in a more OOP framework.

#include <iostream>

using namespace std;

/**************************************************************

* Given two number streams (Assume the numbers are distinct

* your task is to find whether they will create the same BST

* Author@Larry, Zhirong Li on April 4, 2012

* Toronto

*************************************************************/

struct TreeNode{

int _nodeValue;

TreeNode *_leftChild;

TreeNode *_rightChild;

TreeNode(int nodeValue): _nodeValue(nodeValue){

_leftChild = NULL;

_rightChild = NULL;

}

};

class TreeTest{

public:

TreeTest(const int *pValueList =NULL, int length=0):_pRootTree(NULL){

if (length>0){

TreeNode *ptr = new TreeNode(pValueList[0]);

for(int i=1;i<length;++i){

buildBST(ptr, pValueList[i]);

}

_pRootTree = ptr;

}

}

~TreeTest(){

deleteBST(_pRootTree);

}

TreeNode * getTreeRoot(){

return this->_pRootTree;

}

protected:

private:

TreeNode * _pRootTree;

void buildBST(TreeNode * const ptr, const int& nodeValue){

if (nodeValue< ptr->_nodeValue){

if (ptr->_leftChild == NULL){

TreeNode *tmp = new TreeNode(nodeValue);

ptr->_leftChild = tmp;

} else

buildBST(ptr->_leftChild, nodeValue);

} else {

if (ptr->_rightChild == NULL){

TreeNode *tmp = new TreeNode(nodeValue);

ptr->_rightChild = tmp;

} else

buildBST(ptr->_rightChild, nodeValue);

}

}

void deleteBST(TreeNode *ptr){

if (ptr == NULL) return;

deleteBST(ptr->_leftChild);

deleteBST(ptr->_rightChild);

delete ptr;

}

};

bool isBSTEquals(const TreeNode * const ptr1, const TreeNode * const ptr2){

if (ptr1 == NULL && ptr2 == NULL)

return true;

else if (ptr1 != NULL && ptr2 != NULL){

if (ptr1->_nodeValue == ptr2->_nodeValue)

return isBSTEquals(ptr1->_leftChild, ptr2->_leftChild) &&

isBSTEquals(ptr1->_rightChild, ptr2->_rightChild);

else

return false;

} else

return false;

}

int main(int argc, char *argv[]){

static struct {

const int valueList1[5];

const int valueList2[5];

const int length;

const bool equals;

} testCases[] = {

{{10,5,20,15,30},{10,20,15,30,5}, 5, true},

{{10,5,20,15,30},{10,15,30,20,5}, 5, false}

};

for(size_t i=0;i<sizeof(testCases)/sizeof(testCases[0]);++i){

//Assume object always successfully created.

TreeTest * pTreeTest1 = new TreeTest(testCases[i].valueList1, testCases[i].length);

TreeTest * pTreeTest2 = new TreeTest(testCases[i].valueList2, testCases[i].length);

if (isBSTEquals(pTreeTest1->getTreeRoot(), pTreeTest2->getTreeRoot()) != testCases[i].equals){

cout<<"The "<<i<<"-th test failed."<<endl;

delete pTreeTest1;

delete pTreeTest2;

return 1;

}

delete pTreeTest1;

delete pTreeTest2;

}

cout<<"Test succeeds!"<<endl;

}

Check if identical BSTs from given number streams in Java

The same problem as above, this time we don't bother to create the BSTs. The key idea is similar to quicksort except that we keep the relative order of smaller/bigger items with respect to the pivoting element. By doing so, we don't interfere with the creation order.

import java.util.ArrayList;

import java.util.List;

/**

* The same problem as the above.

* @author Larry, Zhirong Li

* Creation Date: Apr 5, 2012

*/

public class TestIdenticalBSTs {

private static boolean is_BST_identical(List<Integer> array1, List<Integer> array2){

if (array1.isEmpty() && array2.isEmpty())

return true;

else if (array1.size() != array2.size())

return false;

else {

//decide the root node is identical

if (!((Integer)array1.get(0)).equals((Integer)array2.get(0)))

return false;

else {

List<Integer> array1LeftSublist = new ArrayList<Integer>();

List<Integer> array1RightSublist = new ArrayList<Integer>();

List<Integer> array2LeftSublist = new ArrayList<Integer>();

List<Integer> array2RightSublist = new ArrayList<Integer>();

// array1.size() = array2.size() here

for(int i=1;i<array1.size();i++){

if (((Integer)array1.get(i)).compareTo((Integer)array1.get(0))<0)

array1LeftSublist.add(array1.get(i));

else

array1RightSublist.add(array1.get(i));

if (((Integer)array2.get(i)).compareTo((Integer)array2.get(0))<0)

array2LeftSublist.add(array2.get(i));

else

array2RightSublist.add(array2.get(i));

}

return is_BST_identical(array1LeftSublist, array2LeftSublist) &&

is_BST_identical(array1RightSublist, array2RightSublist);

}

}

}

public static void main(String[] args) {

//{10,5,20,15,30},{10,20,15,30,5} true

List<Integer> inputStream1 = new ArrayList<Integer>();

inputStream1.add(new Integer(10));

inputStream1.add(new Integer(5));

inputStream1.add(new Integer(20));

inputStream1.add(new Integer(15));

inputStream1.add(new Integer(30));

List<Integer> inputStream2 = new ArrayList<Integer>();

inputStream2.add(new Integer(10));

inputStream2.add(new Integer(20));

inputStream2.add(new Integer(15));

inputStream2.add(new Integer(30));

inputStream2.add(new Integer(5));

boolean isEqual = TestIdenticalBSTs.is_BST_identical(inputStream1, inputStream2);

System.out.println("\"The given two input stream will generate the same BST\" is " +isEqual);

}

}

In place circular shift of character strings.

Rotate a one-dimensional array of n elements to the right by k steps. For instance, with n=7 and k=3, the array {a, b, c, d, e, f, g} is rotated to {e, f, g, a, b, c, d}.

#include <iostream>

using namespace std;

/*****************************************************************************************************

* In place circular shift of character string, we don't bother to use double string reverse approach

* Instead, since we know where each character belongs after the rotation, we can do a chain of replacement

* of length N as compared to 2N changes for double string reverse

* Author@Larry, Zhirong Li on April 05, 2012 in Toronto

****************************************************************************************************/

void circularShift(char * const p2CharArray, int length, int shifts){

int num_shifts = 0;

int curr_position = 0;

char currChar = p2CharArray[curr_position];

while (num_shifts<length){

int target_position = ((curr_position + shifts )%length + length)%length;

char tmpChar = p2CharArray[target_position];

p2CharArray[target_position] = currChar;

currChar = tmpChar;

curr_position = target_position;

num_shifts++;

}

}

int main(int argc, char** argv){

char charArray[] = "veYouILo";

const char resultArray[] = "ILoveYou";

const int n = strlen(charArray);

const int k = 3;

if (n>0){

circularShift(charArray, n, k);

if (strcmp( charArray, resultArray) == 0)

cout<<"Rotation is correctly performed!"<<endl;

else

cout<<"Rotation is not correctly performed!"<<endl;

}

}

Jump Game: Naive Approach (Dijkstra Algorithm)

Given an array of non-negative integers, you are initially positioned at the first index of the array.

Each element in the array represents your maximum jump length at that position.

Your goal is to reach the last index in the minimum number of jumps.

For example:

Given array A = [2,3,1,1,4]

The minimum number of jumps to reach the last index is 2. (Jump 1 step from index 0 to 1, then 3 steps to the last index.)

Notice that this game only contains unidirectional jumps, so there must exist a better solution. Look at above figure illustrating how Dijkstra algorithm works. The worst case complexity is O(|E| + |V| log |V|).

#include <iostream>

#include <vector>

#include <deque>

using namespace std;

/**************************************************************************************************

Given an array of non-negative integers, you are initially positioned at the first index of the array.

Each element in the array represents your maximum jump length at that position.

Your goal is to reach the last index in the minimum number of jumps.

For example:

Given array A = [2,3,1,1,4]

The minimum number of jumps to reach the last index is 2. (Jump 1 step from index 0 to 1, then 3

steps to the last index.)

Author@Larry, Zhirong Li April 7, 2012 in Toronto

Implementation of Dijkstra algorithm: http://en.wikipedia.org/wiki/Dijkstra%27s_algorithm

1 function Dijkstra(Graph, source):

2 for each vertex v in Graph: // Initializations

3 dist[v] := infinity ; // Unknown distance function from source to v

4 previous[v] := undefined ; // Previous node in optimal path from source

5 end for ;

6 dist[source] := 0 ; // Distance from source to source

7 Q := the set of all nodes in Graph ; // All nodes in the graph are unoptimized - thus are in Q

8 while Q is not empty: // The main loop

9 u := vertex in Q with smallest distance in dist[] ;

10 if dist[u] = infinity:

11 break ; // all remaining vertices are inaccessible from source

12 end if ;

13 remove u from Q ;

14 for each neighbor v of u: // where v has not yet been removed from Q.

15 alt := dist[u] + dist_between(u, v) ;

16 if alt < dist[v]: // Relax (u,v,a)

17 dist[v] := alt ;

18 previous[v] := u ;

19 decrease-key v in Q; // Reorder v in the Queue

20 end if ;

21 end for ;

22 end while ;

23 return dist[] ;

24 end Dijkstra.

***************************************************************************************************/

int main(){

const int A[] = {2,3,1,1,4};

// const int A[] = {3,2,1,0,4};

const size_t array_len = sizeof(A)/sizeof(A[0]);

// shortest distance from s

int * dist = new int[array_len];

// previous node along the shortest path

int * prev = new int[array_len];

for (int i=0;i<array_len;++i){

prev[i] = INT_MIN;

dist[i] = INT_MAX;

}

// incidence matrix

int ** p2IncidenceMatrix = new int*[array_len];

for (unsigned int i=0;i<array_len;++i)

p2IncidenceMatrix[i] = new int[array_len];

for (unsigned int i=0;i<array_len;++i)

for (unsigned int j=0;j<array_len;++j)

p2IncidenceMatrix[i][j] = 0;

// upper triangle incidence matrix here

for (int i=0;i<array_len;++i)

for (int j=1;j<=A[i];++j){

if (i+j<array_len)

p2IncidenceMatrix[i][i+j] =1;